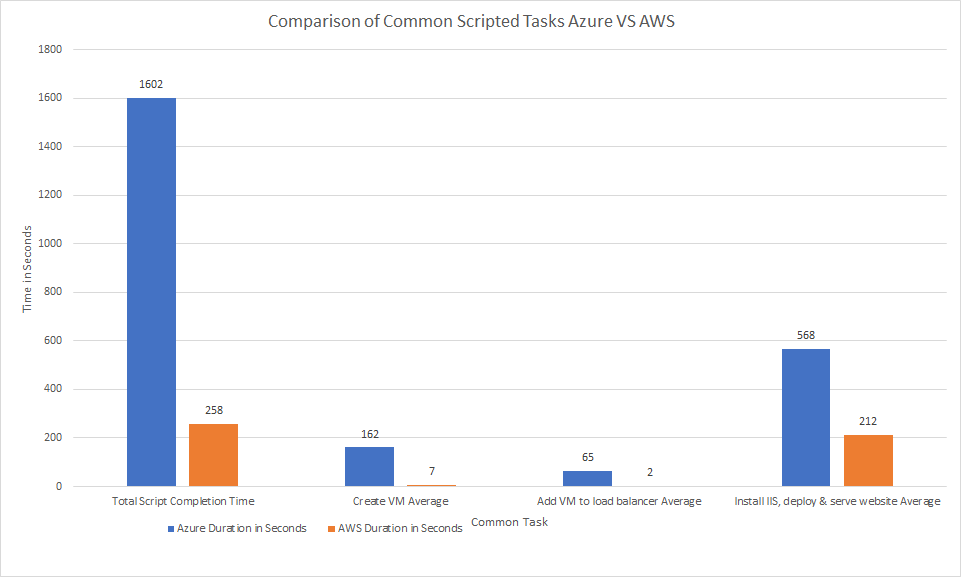

As a follow on to my script that deploys a cluster of two load balanced Windows servers, installs IIS, and deploys a website for Azure, I created a similar script to do so in AWS. A few things of note that I feel makes AWS’s script better.

- Certain tasks are non blocking and do not wait for the action to complete. I added wait states in the script to make sure time comparisons are true.

- AWS actions are much faster. On average in my script it takes Azure 65 seconds to add a VM to a load balancer where in AWS its an average of 2 seconds.

- AWS’s CLI allows for multiple instance IDs to be provided per command to increase efficiency even though my script doesn’t really take advantage of this which provides a more true comparison since I don’t think Azure’s CLI or PowerShell module allows for this.

Here is the script:

#This script creates a number of Windows VMs, installs IIS, a simple webpage, and places them behind a load balancer

#run this if needed

#aws configure

function elapsedTime {

$CurrentTime = $(get-date)

$elapsedTime = $CurrentTime - $StartTime

$elapsedTime = [math]::Round($elapsedTime.TotalSeconds,2)

Write-Host "Elapsed time in seconds: " $elapsedTime -BackgroundColor Blue

}

#Captures start time for script elapsed time measurement

$StartTime = $(get-date)

#Sets "Constants" to be used to create VMs

$imageID = "ami-0182e552fba672768" #Amazon's provided windows 2019 datacenter base

$subnet = "subnet-000000000" #my subnet for us-east-2a

$securityGroup = "sg-00000000" #My network security baseline

$instanceType = "t2.medium" #Instance size, 2 vCPUs, 4 GB RAM

$keyPair = "ServerHobbyist" #Keypair to retreive administrator password

$instanceName = "WebWinApp" #sets base name

$class = "disposable" #sets class tag as disposable for easier identification and cleanup

#creates load balancer

$lbName = "WinWebLB1"

Write-Host "Creating Loadbalancer $($LbName)"

aws elb create-load-balancer `

--load-balancer-name $lbName `

--listeners "Protocol=HTTP,LoadBalancerPort=80,InstanceProtocol=HTTP,InstancePort=80" `

--subnets $subnet `

--security-groups $securityGroup

aws elb add-tags --load-balancer-name $lbName --tags Key=Class,Value=$class #tags elb with disposable class

aws elb configure-health-check --load-balancer-name $lbName --health-check Target=HTTP:80/,Interval=5,UnhealthyThreshold=2,HealthyThreshold=2,Timeout=3 #sets a lower threshold for health checks

Write-Host "Load Balancer $($Lbname) created"

elapsedTime

$serverCount = 2 #how many VMs to deploy

$instancesDeployed = New-Object System.Collections.Generic.List[System.Object] #creates array list that will contain instance IDs deployed

for ($i=1; $i -le $serverCount; $i++){

$instanceNameTag = $instanceName + $i

Write-Host "Creating VM $($instanceNameTag)"

$instance = aws ec2 run-instances `

--image-id $imageID `

--count 1 `

--instance-type $instanceType `

--key-name $keyPair `

--security-group-ids $securityGroup `

--subnet-id $subnet | ConvertFrom-Json

aws ec2 create-tags --resources $instance.instances.InstanceId --tags Key=Name,Value=$instanceNameTag #tags instance with name

aws ec2 create-tags --resources $instance.instances.InstanceId --tags Key=Class,Value=$class #tags instance with name

$instancesDeployed.Add($instance.Instances.InstanceId)

Write-Host "VM $($instanceNameTag) created"

elapsedTime

}

Start-Sleep -Seconds 15

#Checks to make sure each instance deployed from above is in a running state, otherwise it can't recieve the IAM role.

foreach ($instanceDeployed in $instancesDeployed){

$instance = aws ec2 describe-instances --instance-ids $instanceDeployed | ConvertFrom-Json

$InstanceTags = $Instance.Reservations.Instances.Tags

$InstanceName = $InstanceTags | Where-Object {$_.Key -eq "Name"}

$InstanceName = $InstanceName.Value

Write-Host "Checking if instance $($InstanceName) is ready to receive IAM role for SSM"

while ($instance.Reservations.Instances.State.Name -ne "running") {

Write-Host "Instance $($InstanceName) not ready. Waiting to check again"

sleep 5

Write-Host "Checking if instance $($InstanceName) is ready to receive IAM role for SSM"

$instance = aws ec2 describe-instances --instance-ids $instanceDeployed | ConvertFrom-Json

}

Write-Host "Instance $($InstanceName) is now ready, assigning role"

elapsedTime

aws ec2 associate-iam-instance-profile --instance-id $instanceDeployed --iam-instance-profile Name=AmazonSSMRoleForInstancesQuickSetup

}

Start-Sleep -Seconds 15

#Checks to make sure each instance deployed from above has the SSM agent. Otherwise commands can't be sent through AWS's orchestration system

foreach ($instanceDeployed in $instancesDeployed){

$instance = aws ec2 describe-instances --instance-ids $instanceDeployed | ConvertFrom-Json

$InstanceTags = $Instance.Reservations.Instances.Tags

$InstanceName = $InstanceTags | Where-Object {$_.Key -eq "Name"}

$InstanceName = $InstanceName.Value

Write-Host "Checking if instance $($InstanceName) has receieved the system management agent"

$ssmTest = aws ssm list-inventory-entries --instance-id $instanceDeployed --type-name "AWS:InstanceInformation" | ConvertFrom-Json

while ($ssmTest.Entries.AgentType -ne "amazon-ssm-agent"){

$ssmTest = aws ssm list-inventory-entries --instance-id $instanceDeployed --type-name "AWS:InstanceInformation" | ConvertFrom-Json

Write-Host "Instance $($InstanceName) has not yet received the SSM agent"

start-sleep -Seconds 5

}

Write-Host "Instance $($InstanceName) has received the SSM agent. Proceeding to next instance or step."

elapsedTime

}

#Installs IIS and deploys website

foreach ($instanceDeployed in $instancesDeployed){

Write-Host "Sending command to install IIS and deploy website on $($instanceDeployed)"

aws ssm send-command `

--document-name "AWS-RunPowerShellScript" `

--parameters commands=['Add-WindowsFeature Web-Server; Invoke-WebRequest -Uri "https://serverhobbyist.com/deployment/index.html" -OutFile "c:\inetpub\wwwroot\index.html"'] `

--targets "Key=instanceids,Values=$($instanceDeployed)" `

--comment "Installs IIS"

Write-Host "Command sent to $($instanceDeployed)"

elapsedTime

}

#adds VMs to load balancer

foreach ($instanceDeployed in $instancesDeployed){

Write-Host "Registering instance $($instanceDeployed) with LB"

aws elb register-instances-with-load-balancer --load-balancer-name $lbName --instances $instanceDeployed #registers instance with load balancer

Write-Host "Registered instance $($instanceDeployed) with LB"

elapsedTime

}

Write-Host "Checking if website is ready to be served from load balancer"

$lbURL = aws elb describe-load-balancers --load-balancer-name $lbName | ConvertFrom-Json

$lbURL = "http://" + $lbUrl.LoadBalancerDescriptions.CanonicalHostedZoneName

$check = $false

while ($check -eq $false){

try {

$check = $true

$result = invoke-webrequest -uri $lbURL -UseBasicParsing -TimeoutSec 20

}

catch {

$check = $false

Write-Host "Website failed to load. Trying again"

Start-Sleep -Seconds 5

}}

Write-Host "Website is now loading at $lbURL"

elapsedTime

Write-Host "Script completed" -BackgroundColor Blue

elapsedTime